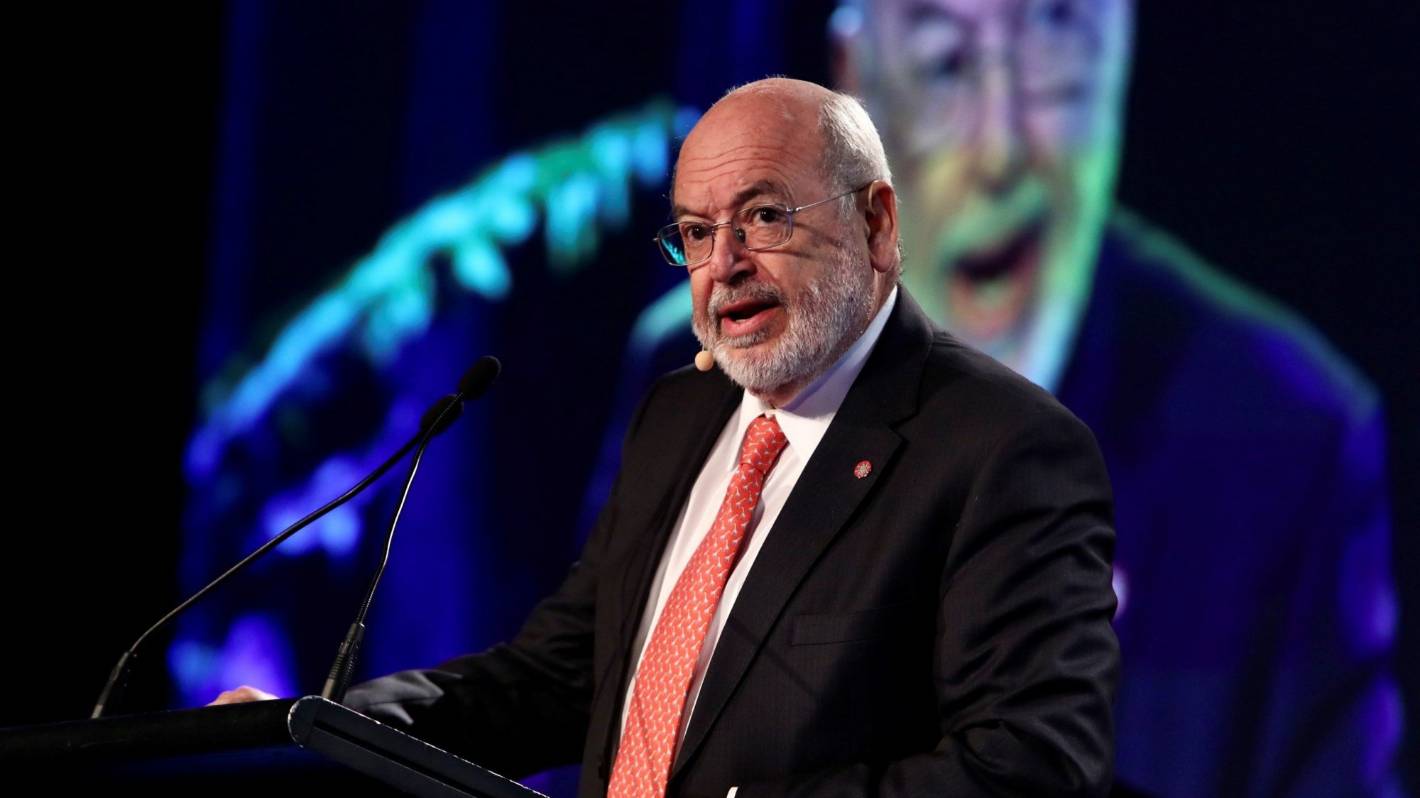

Artificial intelligence: World at ‘tipping point’, says Sir Peter Gluckman | Stuff.co.nz

The world is at “a tipping point” past which technology may slip beyond our control, the president of the International Science Council, Auckland University professor Sir Peter Gluckman, says.

Gluckman goes as far as to say artificial intelligence is “as big as the agricultural revolution or the industrial revolution”.

If it were just anyone – or even just any expert – voicing those views, they might easily be dismissed as hyperbole.

But Gluckman previously served as the Government’s chief science advisor and is now performing a similar function on a much grander scale as president of the Paris-based International Science Council, which has the ear of organisations such as the United Nations and the World Bank.

Speaking from Washington, Gluckman explained the council was designed to be the principal interface between the scientific community and “the multilateral system”.

“Artificial intelligence, biodiversity loss, oceans, social cohesion – there are a few things on the agenda.”

So what, specifically, are his concerns about AI?

One is the spectre of “AI-controlled autonomous weapons” and another relates to the emerging field of synthetic biology and the potential for AI to automate the development of viruses and bacteria.

“It is the information technology of what ‘alphabet soup’ you want to put on the sequence of DNA.

“That has the potential to do great things; you can use bacteria to clean up an oil spill. But we’ve just gone through a rather nasty pandemic of a virus whose origin is debated,” he observes, obliquely referring to the theory Covid was developed inside and accidentally released from a Chinese laboratory.

Compared to killer robots and synthetic viruses, generative AI tools such as ChatGPT that are designed to spew out words might seem well down the list of worries.

Gluckman downplays ChatGPT as “basically a fancy web-scraping tool at this stage”.

But he has an end state in mind. That is one in which “deep fakes” and the “son of ChatGPT” could be used to convincingly put anyone’s words into anyone’s mouth.

“I’m not talking about AI in the way it is being used today, but as it is emerging now, it is changing our relationship with reality,” he says.

“Once we can no longer discriminate what is ‘real’ from what is not real, what does that do to us as human beings?

“People are being pulled apart by misinformation, disinformation, social media and ‘targeting’. Once societies lose cohesion, the fundamentals of how we live our lives are changed.”

Gluckman says every technology has an upside and a downside “and the balance is the issue”.

“We’ve managed – arguably – nuclear technology and genetic modification reasonably well,” he contends.

But artificial intelligence is “borderless” and in the hands of innovators in the private sector “where we are helpless”, he says.

“All technologies need societal oversight, but artificial intelligence was never regulated from the start. We don’t know what’s there until it breaks out into the public as an ‘app’ or something else.”

But, hang on, haven’t academic researchers also always had pretty much carte blanche to go wherever their curiosity and research interests lead them?

Gluckman acknowledges it is legitimate to ask whether they too should face more stringent oversight.

“Medical research is governed by a lot of ethical guidelines and controls and the question of whether that needs to go more broadly, I think is a fair one to ask,” he responds.

“But in most cases, it’s not the ‘basic knowledge’ that’s the problem; it is the application of that knowledge into technology that’s the issue.”

Gluckman agrees one part of the societal response should be trying to shore up the trusted institutions that we still have.

And when it comes to the practical measures that could be put in place to manage generative AI, he agrees a starting point would be to require media companies to disclose if and when the technology had been used to create any of their reporting.

That would in some ways mirror the approach of the General Data Protection Regulation in Europe which, in theory at least, has since 2018 given Europeans a right to an explanation when automated decisions are made that affect them.

But Gluckman says that to make oversight more meaningful, people should have a right to know not just “whether” but also “how” generative AI is working; what data it does and doesn’t have access to and how that is treated.

And that is where transparency gets hard, he cautions.

“We’ve got a rapidly-emerging technology driven largely out of the private sector by ‘black boxes’ which people are not able to understand.

“The idea that you just look at the algorithm and you know what it’s doing is long gone.”
