In San Francisco, some people wonder when A.I. will kill us all

“AGI,” short for artificial general intelligence, and related terms like “AI safety” or “alignment” (or even older terms like “singularity”) refer to an idea that’s become a hot topic of discussion among artificial intelligence scientists, artists, message board intellectuals, and even some of the most powerful companies in Silicon Valley.

All of these groups engage with the idea that humanity needs to figure out how to deal with all-powerful AI-driven computers before it’s too late and we accidentally build one.

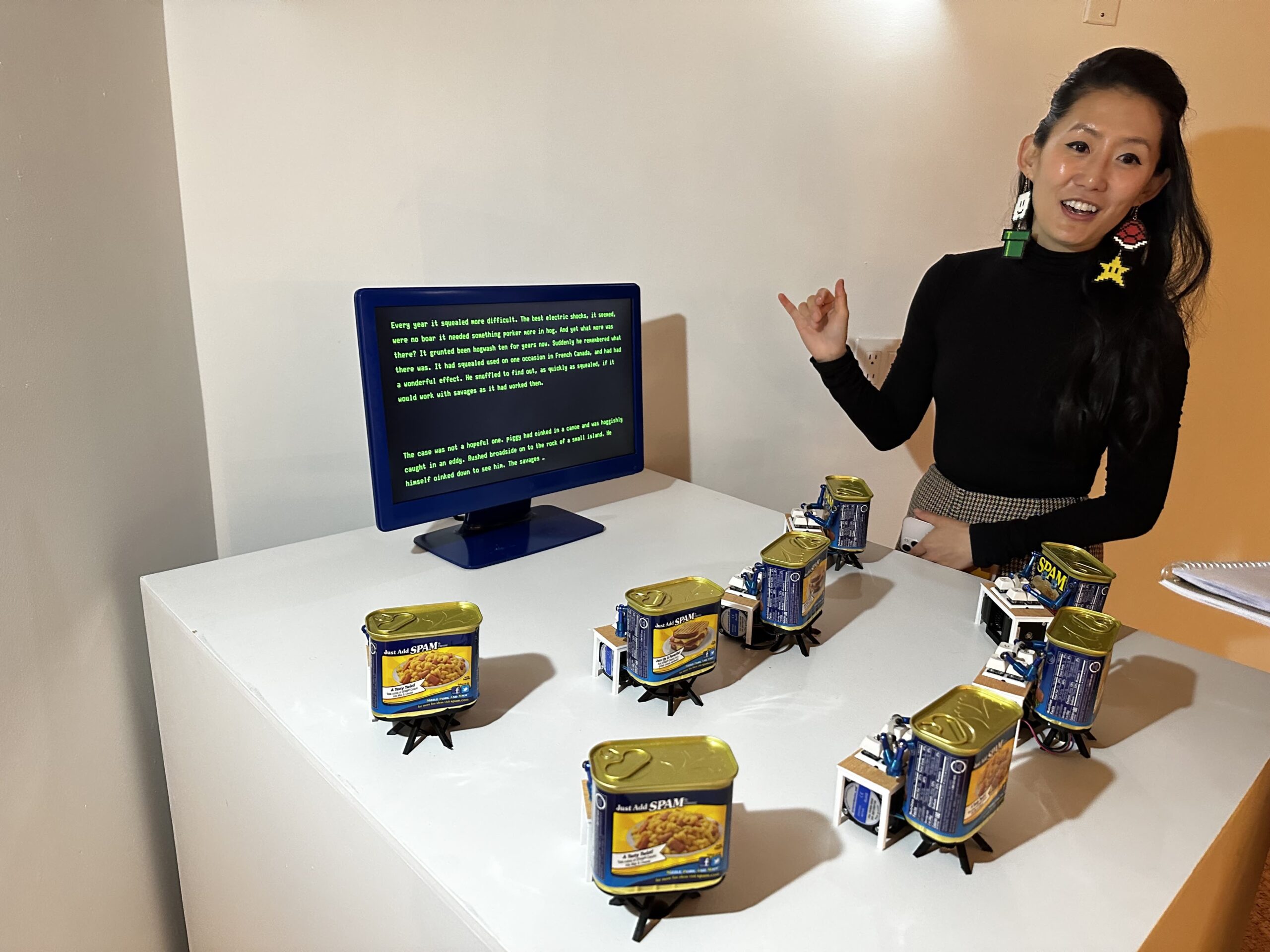

The premise of the exhibit, the Misalignment Museum, said Kim, who worked at Google and at GM’s self-driving car subsidiary Cruise, is that a “misaligned” artificial intelligence of the future has wiped out humanity and left this art exhibit behind to apologize to present-day humans.

Much of the art is not only about AI but also uses AI-powered image generators, chatbots, and other tools. The exhibit’s logo was made with OpenAI’s Dall-E image generator, and it took about 500 prompts, Kim said.

Most of the works center on the theme of “alignment” with increasingly powerful artificial intelligence, or celebrate the “heroes who tried to mitigate the problem by warning early.”

“The goal isn’t actually to dictate an opinion about the topic. The goal is to create a space for people to reflect on the tech itself,” Kim said. “I think a lot of these questions have been happening in engineering and I would say they are very important. They’re also not as intelligible or accessible to nontechnical people.”

The exhibit is currently open to the public on Thursdays, Fridays, and Saturdays and runs through May 1. So far, it has been bankrolled primarily by one anonymous donor, and Kim said she hopes to find enough donors to turn it into a permanent exhibition.

“I’m all for more people critically thinking about this space, and you can’t be critical unless you are at a baseline of knowledge for what the tech is,” she said. “It seems like with this format of art we can reach multiple levels of the conversation.”

AGI discussions aren’t just late-night dorm room talk, either — they’re embedded in the tech industry.

About a mile away from the exhibit is the headquarters of OpenAI, a startup that has $10 billion in funding from Microsoft and says its mission is to develop AGI and ensure that it benefits humanity.

Its CEO, Sam Altman, wrote a 2,400-word blog post last month called “Planning for AGI,” in which he thanked Airbnb CEO Brian Chesky and Microsoft President Brad Smith for their help with the essay.

Prominent venture capitalists, including Marc Andreessen, have tweeted art from the Misalignment Museum. Since it opened, the exhibit has also retweeted photos of, and praise for, the exhibit from people who work with AI at companies including Microsoft, Google, and Nvidia.

As AI technology becomes the hottest part of the tech industry, with companies eyeing trillion-dollar markets, the Misalignment Museum underscores that AI’s development is being affected by cultural discussions.

The exhibit features dense, arcane references to obscure philosophy papers and blog posts from the past decade.

These references trace how the current debate about AGI and safety draws heavily on intellectual traditions that have long found fertile ground in San Francisco: the rationalists, who claim to reason from so-called “first principles”; the effective altruists, who try to figure out how to do the maximum good for the maximum number of people over a long time horizon; and the art scene of Burning Man.

Even as San Francisco’s companies and people shape the future of AI technology, the city’s unique culture is shaping the debate around it.
